Figure: A Hyperscaler Data Center showing AI power servers, water infrastructure, power racks, and renewable energy sources (solar and wind).

1. Introduction: AI and the Infrastructure Behind Intelligence

The current surge in artificial intelligence (AI) capabilities—spanning large language models, generative tools, real-time translation systems, and smart city automation—depends on something less visible: a rapidly expanding physical infrastructure composed of hyperscale data centers. These data centers are not merely storage hubs or cloud relays; they are AI’s engines. While AI is often lauded for its promise to solve climate challenges, improve agriculture, and enable smarter cities, its increasing energy and resource demands cast a long environmental and social shadow.

AI models today require significant computational resources, particularly during the training phase. Training a single large model can involve processing petabytes of data across thousands of high-performance GPUs for weeks or even months. This computational intensity directly translates into increased electricity and water use—two major environmental factors tied to the siting, operation, and expansion of modern data centers.

2. Data Centers in the Age of AI

Historically, data centers were designed to house general-purpose servers that managed web hosting, email, and enterprise software. These systems, while vital, were relatively modest in their power and cooling requirements. The AI revolution has changed this paradigm. New data centers are increasingly built to support high-density compute clusters optimized for AI model training, inference serving, and real-time data processing.

According to Zhou (2021), global data volume is expected to rise from 41 zettabytes (ZB) in 2019 to 175 ZB by 2025. This trajectory is broadly consistent with the forecast by Reinsel et al. (2017), who anticipated that data creation would reach 163 ZB by 2025, a tenfold increase from 2016. These forecasts are no longer abstract projections. They reflect real-world changes in infrastructure investment, particularly by the largest technology companies, commonly referred to as hyperscalers: Amazon Web Services (AWS), Microsoft Azure, Google Cloud (Alphabet), and Meta.
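To compare the two forecasts on a common footing, the growth they imply can be expressed as a compound annual growth rate (CAGR). The sketch below is illustrative only: the function name `cagr` is hypothetical, and the 16.3 ZB baseline for 2016 is an assumption inferred from the "tenfold" statement rather than a figure quoted above.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Zhou (2021): 41 ZB in 2019 to 175 ZB in 2025 (6 years).
zhou_rate = cagr(41, 175, 2025 - 2019)

# Reinsel et al. (2017): tenfold increase from 2016 to 2025 (9 years).
# Assumption: the 2016 baseline is 163 ZB / 10 = 16.3 ZB.
reinsel_rate = cagr(16.3, 163, 2025 - 2016)

print(f"Zhou implied growth:    {zhou_rate:.1%} per year")
print(f"Reinsel implied growth: {reinsel_rate:.1%} per year")
```

Under these assumptions, both forecasts imply data growth in the range of roughly 27 to 29 percent per year.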

The architectural evolution of data centers to support AI workloads involves specialized hardware like GPUs and TPUs, which demand significantly more energy than traditional CPUs. As a result, data centers that support generative AI are now some of the most energy-intensive structures in the global infrastructure landscape.

3. Hyperscalers: The Titans of AI Infrastructure

The largest share of this growth is driven by a handful of powerful actors. Amazon, Alphabet, Microsoft, and Meta dominate the AI data center space globally. These hyperscalers build and operate large-scale cloud platforms that train AI models, manage enterprise services, and power consumer-facing applications.

As of 2025, the United States hosts 5,426 data centers, the most of any country (Cloudscene, 2025). Within the United States, Virginia alone accounts for 13 percent of the world’s data center capacity and nearly 25 percent of total North American capacity (Sickles et al., 2024). Globally, the United States represents 54 percent of total hyperscale capacity, and analysts project that 130 to 140 new hyperscale data centers will be built annually (Synergy Research Group, 2025).

Hyperscalers tend to site AI data centers in regions that offer favorable tax policies, cheap electricity, and moderate climates. These siting decisions often produce clusters of massive data centers in regions that may lack the natural resources or infrastructure to support them sustainably.

4. Data Center Economics in the Age of AI: The Rising Cost of Intelligence

As AI permeates every sector—from finance and pharmaceuticals to logistics and defense—the infrastructure that enables it is rapidly scaling to meet unprecedented computational demands. At the core of this infrastructure lies the data center: the physical site where data is processed, stored, and analyzed.

But as the AI boom escalates, so too do the energy intensity and operational complexity of modern data centers. A new body of research and policy is emerging around the economic, environmental, and locational implications of AI-driven digital infrastructure. The transition from traditional computing workloads to AI-specific tasks is fundamentally altering the energy profile of data centers. Unlike conventional cloud services, AI workloads are compute-dense, requiring massive parallel processing over sustained periods. Training a large language model, for example, can consume hundreds of megawatt-hours of electricity or more.

Recent findings by Chen et al. (2024) show that next-generation AI workloads are increasing per-rack power demand by more than 300%. Hyperscalers such as Microsoft, Google (Alphabet), Amazon Web Services, and Meta are now racing to upgrade their global footprints with AI-ready infrastructure, characterized by higher thermal density, advanced cooling systems, and proximity to renewable energy sources.

The macroeconomic implication is clear: electricity has become a major cost center and, increasingly, a constraint. In the United States, the constraint is especially acute. The Department of Energy (2023) reports that data centers could account for as much as 8 percent of national electricity demand by the end of 2023. Some individual data centers now require as much energy as entire small cities. A facility with a capacity of 11,951 kilowatts can consume as much electricity as 10,000 residential homes (Bast et al., 2022). As Berger (2025) notes, energy costs in some U.S. regions now account for 40% to 60% of operational expenses in AI-dedicated data centers.
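The home-equivalence figure can be unpacked with simple arithmetic. The sketch below is a rough illustration, assuming the facility draws its full 11,951 kW continuously throughout the year; real facilities run below nameplate capacity, so the `utilization` parameter and the function name are illustrative assumptions rather than part of the cited study.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def annual_energy_kwh(capacity_kw: float, utilization: float = 1.0) -> float:
    """Annual energy use for a facility running at a fixed share of capacity."""
    if capacity_kw < 0 or not 0.0 <= utilization <= 1.0:
        raise ValueError("capacity must be >= 0 and utilization within [0, 1]")
    return capacity_kw * utilization * HOURS_PER_YEAR


# Figures from Bast et al. (2022) as quoted in the text.
facility_kwh = annual_energy_kwh(11_951)  # assumes continuous full load
per_home_kwh = facility_kwh / 10_000      # implied use per home

print(f"Facility: {facility_kwh / 1e6:.1f} GWh per year")
print(f"Implied household use: {per_home_kwh:,.0f} kWh per year")
```

Under the full-load assumption, the comparison implies about 105 GWh per year for the facility, or roughly 10,500 kWh per home per year.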

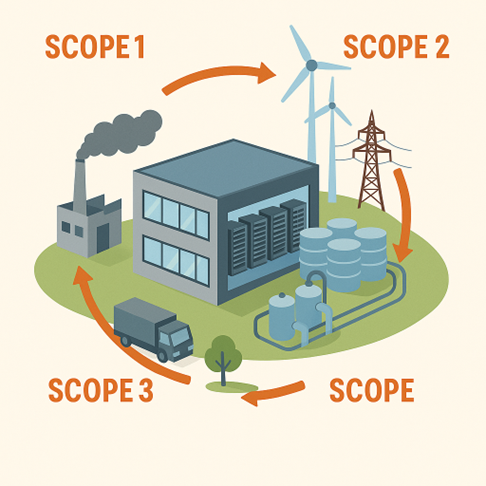

The implications for carbon emissions are profound. While hyperscalers tout their renewable energy commitments, many of their operations still rely on grid energy derived from fossil fuels. Even when renewables are part of the mix, the overall carbon intensity is not always reduced, especially during periods of peak demand. Moreover, embodied emissions—such as those from GPU manufacturing and data center construction—are rarely accounted for in corporate climate pledges (Ananth & Malige, 2024). These Scope 3 emissions, though indirect, are among the most substantial contributors to the carbon footprint of AI infrastructure.

5. Water Usage and Localized Environmental Strain

Energy is only one part of the environmental burden. Data centers also require vast quantities of water, directly for cooling and indirectly through power generation. When accounting for both direct and indirect uses, data centers can consume between 1 and 205 liters of water per gigabyte of data processed (Ristic, Madani, & Makuch, 2015).

In many cases, hyperscalers site their facilities in areas already facing water stress. According to JLARC (2017), a typical data center can consume 6.7 million gallons of water per year—comparable to a large office building. However, the aggregation of dozens or even hundreds of centers in a single region, such as Virginia or Phoenix, can significantly strain local aquifers, rivers, and municipal water systems.
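The aggregation effect can be illustrated with a short calculation. This is a sketch under stated assumptions: every facility is taken to match the JLARC (2017) typical figure of 6.7 million gallons per year, and the cluster size of 100 facilities is a hypothetical value chosen only for illustration.

```python
LITERS_PER_US_GALLON = 3.785411784  # exact definition of the US liquid gallon
TYPICAL_GALLONS_PER_YEAR = 6_700_000  # JLARC (2017), as quoted in the text


def gallons_to_liters(gallons: float) -> float:
    """Convert US liquid gallons to liters."""
    return gallons * LITERS_PER_US_GALLON


def regional_water_use_gallons(
    facility_count: int,
    gallons_per_facility: float = TYPICAL_GALLONS_PER_YEAR,
) -> float:
    """Total annual water use for a cluster of identical facilities."""
    if facility_count < 0:
        raise ValueError("facility_count must be non-negative")
    return facility_count * gallons_per_facility


typical_liters = gallons_to_liters(TYPICAL_GALLONS_PER_YEAR)
daily_gallons = TYPICAL_GALLONS_PER_YEAR / 365
cluster_gallons = regional_water_use_gallons(100)  # hypothetical cluster size

print(f"One facility: {typical_liters / 1e6:.1f} million liters per year")
print(f"One facility: {daily_gallons:,.0f} gallons per day")
print(f"100 facilities: {cluster_gallons / 1e6:,.0f} million gallons per year")
```

A single facility at the typical rate uses about 25 million liters per year; a hypothetical cluster of 100 such facilities would draw 670 million gallons per year from the same regional supply.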

Moreover, the heated wastewater discharged from data centers can alter local ecosystems, damaging aquatic biodiversity and contributing to thermal pollution. The growing demand for AI means that such facilities are unlikely to decrease in number or intensity without strong regulatory intervention.

6. Scope 2 and Scope 3 Emissions Are Under the Microscope

Historically, data center emissions accounting has focused narrowly on Scope 2, the indirect emissions from purchased electricity. However, a growing number of studies are shifting the spotlight to Scope 3 emissions, which include upstream supply chain sources such as semiconductor manufacturing, server transport, and construction materials. Figure 2 shows the Scope 1, 2, and 3 greenhouse gas (GHG) emissions of a typical data center.

A comprehensive study by Ananth and Malige (2024) finds that Scope 3 emissions from hyperscale data centers can exceed Scope 2 emissions by a factor of two, particularly during the buildout phase. As AI systems require more advanced GPUs and faster networking components, the embodied carbon in hardware continues to rise.
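A simple calculation shows why the Scope 3 finding matters for disclosure. The sketch below estimates Scope 2 as electricity use multiplied by a grid emission factor, then applies the factor-of-two ratio reported above. The electricity volume (100,000 MWh) and the grid factor (0.4 tCO2e per MWh) are hypothetical inputs, Scope 1 is omitted for simplicity, and all names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class EmissionsEstimate:
    scope2_tco2e: float  # indirect emissions from purchased electricity
    scope3_tco2e: float  # upstream supply chain and embodied emissions

    @property
    def total_tco2e(self) -> float:
        return self.scope2_tco2e + self.scope3_tco2e

    @property
    def scope3_share(self) -> float:
        """Share of combined Scope 2 + 3 emissions that falls under Scope 3."""
        return self.scope3_tco2e / self.total_tco2e


def estimate_emissions(
    electricity_mwh: float,
    grid_factor_tco2e_per_mwh: float,
    scope3_to_scope2_ratio: float,
) -> EmissionsEstimate:
    """Scope 2 from electricity use; Scope 3 scaled from Scope 2 by a ratio."""
    scope2 = electricity_mwh * grid_factor_tco2e_per_mwh
    return EmissionsEstimate(scope2, scope2 * scope3_to_scope2_ratio)


# Hypothetical facility; the ratio of 2.0 follows Ananth and Malige (2024).
estimate = estimate_emissions(100_000, 0.4, 2.0)

print(f"Scope 2: {estimate.scope2_tco2e:,.0f} tCO2e")
print(f"Scope 3: {estimate.scope3_tco2e:,.0f} tCO2e")
print(f"Scope 3 share of combined total: {estimate.scope3_share:.0%}")
```

When Scope 3 is twice Scope 2, a disclosure limited to Scope 2 covers only one third of the combined total, whatever the absolute figures are.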

This has serious implications for ESG-compliant investors and enterprise clients. With the European Union’s Corporate Sustainability Reporting Directive (CSRD) coming into effect, large companies operating in or with the EU must now disclose Scope 3 emissions, placing hyperscalers under tighter regulatory scrutiny.

7. AI as Both Problem and Solution

Ironically, AI itself can play a critical role in addressing the environmental impacts of its infrastructure. AI-powered control systems, for instance, can optimize cooling operations in data centers, reducing the energy used for cooling by up to 40 percent, as demonstrated by Google’s DeepMind. AI can also facilitate predictive maintenance, dynamic load balancing, and energy-efficient workload scheduling.
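How much a cooling improvement reduces a facility's total energy use depends on how large cooling is as a share of that total. The sketch below assumes the 40 percent figure applies to cooling energy only; the cooling shares tested (20, 30, and 40 percent of facility energy) are hypothetical values, not measurements from any cited source.

```python
def facility_energy_saving(cooling_share: float, cooling_reduction: float) -> float:
    """Fraction of total facility energy saved by cutting cooling energy.

    cooling_share: cooling energy as a fraction of total facility energy.
    cooling_reduction: fractional cut in cooling energy (0.40 = 40 percent).
    """
    for name, value in (
        ("cooling_share", cooling_share),
        ("cooling_reduction", cooling_reduction),
    ):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be within [0, 1]")
    return cooling_share * cooling_reduction


REPORTED_COOLING_REDUCTION = 0.40  # upper bound quoted in the text

for share in (0.20, 0.30, 0.40):  # hypothetical cooling shares
    saving = facility_energy_saving(share, REPORTED_COOLING_REDUCTION)
    print(f"Cooling at {share:.0%} of total: facility saving of {saving:.0%}")
```

The facility-level saving is always smaller than the cooling-level saving, which is why cooling optimization alone cannot offset growth in compute demand.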

Innovations in data center design, such as liquid cooling and waste heat recovery, offer additional promise. In Nordic countries, waste heat from AI centers is now being repurposed to provide district heating to thousands of homes. This approach not only reduces energy waste but also provides social co-benefits.

Companies like Meta and Microsoft have also begun investing in closed-loop water systems to achieve net-zero water usage by 2030. These efforts, while commendable, are still exceptions rather than the norm.

8. Key Investment Implications

Immediate Risks (2024-2025)

- Stranded Assets: Traditional CPU-based data centers may become obsolete as AI workloads on GPU/TPU clusters require roughly 3x the power density. Older, low-density facilities may struggle to accommodate next-generation AI infrastructure and risk becoming stranded assets as client preferences shift.

- Energy Cost Volatility: As data centers become more energy-intensive, especially AI-focused ones, operators face greater exposure to energy price volatility. With energy accounting for about 40-60% of OpEx in some AI-dedicated facilities (Berger, 2025), rising electricity prices create margin compression risk.

- Regulatory Exposure: The EU’s Corporate Sustainability Reporting Directive (CSRD) mandates disclosure of Scope 3 greenhouse gas emissions for covered entities, which include large public EU companies and non-EU companies with significant EU business. This could affect hyperscaler valuations.
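The margin compression risk described above can be sketched numerically. In this minimal example, the revenue (100), baseline OpEx (70), and electricity price increase (20 percent) are hypothetical inputs; only the 40 to 60 percent energy share comes from the text. The function names are illustrative.

```python
def opex_increase(energy_share: float, energy_price_change: float) -> float:
    """Fractional rise in total OpEx when only energy prices change."""
    if not 0.0 <= energy_share <= 1.0:
        raise ValueError("energy_share must be within [0, 1]")
    return energy_share * energy_price_change


def operating_margin(
    revenue: float,
    opex: float,
    energy_share: float,
    energy_price_change: float = 0.0,
) -> float:
    """Operating margin after an energy price shock, all else held constant."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    new_opex = opex * (1 + opex_increase(energy_share, energy_price_change))
    return (revenue - new_opex) / revenue


REVENUE, OPEX, PRICE_SHOCK = 100.0, 70.0, 0.20  # hypothetical inputs

baseline = operating_margin(REVENUE, OPEX, energy_share=0.5)
for share in (0.40, 0.60):  # energy share of OpEx, per the range in the text
    shocked = operating_margin(REVENUE, OPEX, share, PRICE_SHOCK)
    print(f"Energy at {share:.0%} of OpEx: margin {baseline:.1%} -> {shocked:.1%}")
```

With these inputs, a 20 percent rise in electricity prices lowers a 30 percent operating margin to between roughly 22 and 24 percent, depending on the energy share.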

Investment Opportunities

| Sector/Theme | Investment Opportunity | Risk Level | Timeline |
| --- | --- | --- | --- |
| Renewable Energy REITs | Data center power purchase agreements (PPAs) | Medium | 1-3 years |
| Cooling Technology | Liquid cooling, waste heat recovery | High | 2-5 years |
| Water Infrastructure | Closed-loop systems, advanced water treatment tech | Medium | 3-7 years |

Renewable Energy REITs & PPAs: Data centers’ huge power demands are driving investment in renewables and power purchase agreements (PPAs). This can be a moderate-risk, short-term (1-3 year) play as demand and regulatory pressure increase for clean energy[1].

Cooling Technology: As AI power density grows, traditional cooling won’t suffice. Investment in high-potential, but technically riskier, solutions like liquid cooling and capturing/reusing waste heat is expected to ramp up over the next 2-5 years[2].

Water Infrastructure: Efficient water management becomes critical as cooling requirements rise. Investment in closed-loop cooling systems and advanced water treatment tech is developing, with payoffs likely 3-7 years out[3].

Due Diligence Checklist

- Energy procurement strategy and PPA portfolio

- Water usage efficiency and local availability

- Scope 3 emissions reporting capability

- AI-ready infrastructure percentage

- Geographic diversification vs. climate risk

Floodlight Recommended Actions for a Typical Data Center Assessment

- Screen existing tech holdings for data center exposure and energy efficiency

- Evaluate ESG funds for Scope 3 emissions reporting gaps

- Consider infrastructure plays in renewable energy and cooling technology

[1] https://netzeroinsights.com/resources/key-investment-trends-driving-data-center-sustainability-in-2025/

[2] https://www.bloomenergy.com/blog/powering-ai/

[3] https://fortune.com/asia/2025/07/22/data-centers-power-renewable-energy-brainstorm-ai-singapore/

References:

- Ananth, C., & Malige, L. K. (2024). The Impact of Artificial Intelligence on the Modern Data Center Industry. SSRN. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4930505

- Bast, J., et al. (2022). Energy Profile of U.S. Data Centers.

- Berger, A. (2025). Artificial Intelligence Data Centers and United States Based Hyperscalers. Johns Hopkins University. https://jscholarship.library.jhu.edu/items/8792d5a5-91a3-4179-bc73-61fcc2ae1879

- Chen, D., Youssef, A., et al. (2024). Transforming the Hybrid Cloud for Emerging AI Workloads. arXiv. https://arxiv.org/abs/2411.13239

- Cloudscene. (2025). Global Data Center Directory.

- Department of Energy. (2023). Data Center Energy Use Trends.

- Ferreboeuf, H. (2023). Transitioning Towards Sustainable Digital Models. TU-Berlin.

- JLARC. (2017). Data Center Water Consumption in Virginia.

- Lifset, R., et al. (2025). Environmental Law Review.

- Masanet, E., et al. (2020). Recalibrating Global Data Center Energy Use Estimates.

- Sickles, K., et al. (2024). Energy Inequities of Data Centers.

- Zhou, Y. (2021). Global Data Volume Forecast.